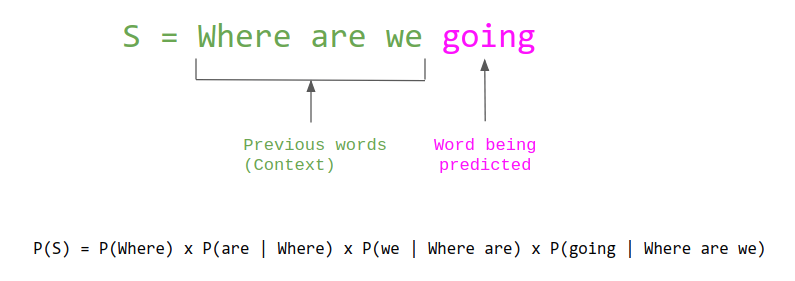

language models

see: https://magazine.sebastianraschka.com/p/understanding-large-language-models

completions

You can run completions using:

    completionGPT(
        system_prompt = "",
        query = "",
        model = "",
        temperature = 0,
        openai_api_key = ""
    )

The system_prompt tells the model how to act. For example, you might say system_prompt = "You are a helpful assistant".

The query is the question you want to ask. For example, you might say: query = "Below is some text from a scientific article, but I don't quite understand it. Could you explain it in simple terms? Text: The goal of the study presented was to compare Tanacetum balsamita L. (costmary) and Tanacetum vulgare L. (tansy) in terms of the antibacterial and antioxidant activity of their essential oils and hydroethanolic extracts, and to relate these activities with their chemical profiles. The species under investigation differed in their chemical composition and biological activities. The dominant compounds of the essential oils, as determined by Gas Chromatography-Mass Spectrometry (GC-MS), were β-thujone in costmary (84.43%) and trans-chrysanthenyl acetate in tansy (18.39%). Using High-Performance Liquid Chromatography with Diode-Array Detection (HPLC-DAD), the chemical composition of phenolic acids and flavonoids were determined. Cichoric acid was found to be the dominant phenolic compound in both species (3333.9 and 4311.3 mg per 100g, respectively). The essential oil and extract of costmary displayed stronger antibacterial activity (expressed as Minimum Inhibitory Concentration (MIC) and Minimum Bactericidal Concentration (MBC) values) than those of tansy. Conversely, tansy extract had higher antioxidant potential (determined by Ferric Reducing Antioxidant Power (FRAP) and DPPH assays) compared to costmary. In light of the observed antibacterial and antioxidant activities, the herbs of tansy and costmary could be considered as promising products for the pharmaceutical and food industries, specifically as antiseptic and preservative agents."

Available options for model include “gpt-4o”, “gpt-4”, “gpt-4-0613”, “gpt-3.5-turbo”, “gpt-3.5-turbo-0613”, “gpt-3.5-turbo-16k”, “gpt-3.5-turbo-16k-0613”, “text-davinci-003”, “text-davinci-002”, “text-curie-001”, “text-babbage-001”, “text-ada-001”. Note that you may not have API access to gpt-4 unless you requested it from OpenAI.

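Temperature goes from 0 to 2 and controls how random the answer is. "Adds randomness" is an oversimplification: roughly, the model's next-token scores are divided by the temperature before a token is sampled, so values near 0 make the output close to deterministic and higher values make it more varied.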
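To make the effect of temperature concrete, here is a minimal sketch of temperature-scaled softmax over made-up token scores. The function name and the example scores are hypothetical, and treating a temperature of 0 as "always pick the top token" is an assumption for illustration; it is not a description of how OpenAI's models or completionGPT are implemented internally.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Turn raw token scores into probabilities, rescaled by temperature."""
    if temperature <= 0:
        # Assumption for this sketch: temperature 0 means greedy selection,
        # so all probability mass goes to the highest-scoring token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.0]
for t in (0, 0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

As the temperature rises, the probabilities move closer together, so less likely tokens are sampled more often.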

You have to provide your OpenAI API key.
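The sketch below shows one way the arguments described above could map onto a chat-style request, without sending anything over the network. It is not the implementation of completionGPT, whose internals are not shown here. The helper names build_chat_request and get_api_key are hypothetical, the payload shape assumes a chat model (the older "text-..." models take a single prompt string instead), and reading the key from an OPENAI_API_KEY environment variable is a common convention rather than something this document requires.

```python
import os

def build_chat_request(system_prompt, query, model, temperature=0):
    """Assemble a chat-style request body from the completionGPT arguments."""
    if not 0 <= temperature <= 2:
        raise ValueError("temperature must be between 0 and 2")
    messages = []
    if system_prompt:
        # The system message tells the model how to act.
        messages.append({"role": "system", "content": system_prompt})
    # The user message carries the actual question.
    messages.append({"role": "user", "content": query})
    return {"model": model, "messages": messages, "temperature": temperature}

def get_api_key(env_var="OPENAI_API_KEY"):
    """Read the API key from the environment instead of hard-coding it."""
    key = os.environ.get(env_var, "")
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return key

request = build_chat_request(
    system_prompt="You are a helpful assistant",
    query="Explain what an essential oil is in one sentence.",
    model="gpt-4o",
    temperature=0,
)
print(request)
```

Keeping the key in an environment variable avoids pasting it into notes or scripts that might be shared.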

prompting strategies

Role and Goal-Based Constraints: These constraints narrow the AI’s response range, making it more appropriate and effective. Leveraging the AI’s pre-trained knowledge, they guide conversation within a specific persona.

Step-by-Step Instructions: Clarity and organization in instructions are crucial. It’s recommended to use simple, direct language and to break down complex problems into steps. This approach, including the “Chain of Thought” method, helps the AI follow and effectively respond to the user’s request.

Expertise and Pedagogy: The user’s knowledge and perspective play a vital role in guiding the AI. The user should have a clear vision of how the AI should respond and interact, especially in educational or pedagogical settings.

Constraints: Setting rules or conditions within prompts helps guide the AI’s behavior and makes its responses more predictable. This includes defining roles (like a tutor), limiting response lengths, and controlling the flow of conversation.

Personalization: Using prompts that solicit information and ask questions can help the AI adapt to different scenarios and provide more personalized responses.

Examples and Few-Shot Learning: Providing the AI with a few worked examples in the prompt helps it understand and adapt to a new task better than zero-shot prompting, where no examples are given.

Asking for Specific Output: Experimenting with different types of outputs, such as images, charts, or documents, can leverage the AI’s capabilities.

Appeals to Emotion: Recent research suggests that adding emotional phrases to requests can improve the quality of AI responses. Different phrases may be effective in different contexts.

Testing and Feedback: It’s important to test prompts with various inputs and perspectives to ensure they are effective and helpful. Continuous tweaking based on feedback can improve the prompts further.

Sharing and Collaboration: Sharing structured prompts allows others to learn and apply them in different contexts, fostering a collaborative environment for AI use.
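Several of the strategies above (role and goal, constraints, step-by-step instructions, few-shot examples) can be combined when assembling the system_prompt and query strings. The following is a minimal sketch under stated assumptions: the helper names, the "Input:/Output:" layout, and the example texts are all hypothetical choices for illustration, not a prescribed format.

```python
def build_system_prompt(role, goal, constraints):
    """Combine a role, a goal, and explicit rules into one system prompt."""
    lines = [f"You are {role}.", f"Your goal: {goal}"]
    if constraints:
        lines.append("Follow these rules:")
        lines.extend(f"- {rule}" for rule in constraints)
    return "\n".join(lines)

def build_few_shot_query(examples, new_input, step_by_step=True):
    """Put a few worked examples before the new input (few-shot prompting)."""
    parts = [f"Input: {inp}\nOutput: {out}" for inp, out in examples]
    if step_by_step:
        # Step-by-step instruction in the spirit of "Chain of Thought".
        parts.append("Work through the next input step by step.")
    parts.append(f"Input: {new_input}\nOutput:")
    return "\n\n".join(parts)

system_prompt = build_system_prompt(
    role="a patient science tutor",
    goal="explain research abstracts in simple terms",
    constraints=["Use at most three sentences.", "Avoid jargon."],
)
query = build_few_shot_query(
    examples=[("GC-MS", "A lab method that separates and identifies chemicals.")],
    new_input="HPLC-DAD",
)
print(system_prompt)
print(query)
```

Keeping prompts in small functions like these also makes them easier to test with different inputs and to share with others.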